James Pritchett December 11, 2023

Micro frontends are one of the hottest modern architecture paradigms for creating distributed web applications where the content creation responsibility is spread out over various teams. Changing content with hard-coded frontends requires coordination between many teams. Being able to dynamically insert content at a dynamically created route and expose it in a dynamically generated menu is a game changer, empowering any stakeholder and requiring little cross-team involvement.

Organizations that implement micro frontends are able to manage UX consistency and brand identity across multiple dev teams. With more resources aligned to boost the velocity and time to market for web development, one should be able to build a great website that’s rich in compelling web experiences, right? Potentially – but that won’t matter if nobody can find it on the web. Search is still crucial for digital businesses, and the dynamic nature that makes micro frontends so appealing can also be their Achilles’ heel when it comes to SEO.

The good news is that here at Nuvalence, we’ve cracked the code on this for one of our clients. In this article, I’ll tell you how we did it.

Timing is Everything

The vulnerability of using micro frontends is revealed when companies need to add relevant brand copy and SEO content for social media links. A dynamically generated route is only visible to the crawler if the URL is known at the time the crawler loads the site. Similarly, links posted on social media rely on metadata available in the initial response.

In our example, Google’s crawlers are unable to properly render asynchronously-loaded content, so relying on micro frontends written in JavaScript frameworks to update page content and meta tags doesn’t work. For details on why, see Google’s documentation on JavaScript SEO. The bottom line is that lacking a presence in Google could be a major blow to any modern business.

Overcoming this is therefore an imperative, and it demands a creative solution. In a static website or one without dynamically-inserted content, one can leverage server-side rendering (SSR) to alleviate this issue by having the server give a full response, including SEO tags, without JavaScript. In our dynamic, asynchronously-loaded JavaScript app, we have to find a different way. Let’s start by assessing what we’re working with.

Understanding the Application Architecture

Content micro frontends can be written in React, Vue, or Angular. In this example, the application uses React as the host and scaffolding for the content. The host and the content all use the webpack build system with the module federation plugin. Webpack is the build system that tooling such as create-react-app and Vue CLI uses by default, although those utilities hide its configuration. The module federation plugin converts components into modules that can be lazy-loaded into the document object model (DOM) and act like first-class React components. Using this plugin, a content team can create dozens of content components, expose each of them as an individual module, and publish them to a vanity URL owned and managed by that team. The host dynamically loads the modules as needed.
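To make the "expose each component as a module" idea concrete, here is a minimal sketch of the options a content team might hand to webpack 5's `ModuleFederationPlugin`. The team name, component names, and source paths are all hypothetical, and the helper `buildRemoteOptions` is ours for illustration, not part of webpack.

```typescript
// Subset of the ModuleFederationPlugin options used in this sketch.
interface FederationOptions {
  name: string;                    // unique container name for this remote
  filename: string;                // entry file the host downloads at runtime
  exposes: Record<string, string>; // public module name -> source file
  shared: Record<string, { singleton: boolean }>;
}

// Hypothetical helper: expose every listed component as its own module.
function buildRemoteOptions(team: string, components: string[]): FederationOptions {
  const exposes: Record<string, string> = {};
  for (const component of components) {
    // Assumed project layout: one file per component under src/components.
    exposes[`./${component}`] = `./src/components/${component}`;
  }
  return {
    name: team,
    filename: "remoteEntry.js",
    exposes,
    // Share a single React instance between the host and the remote.
    shared: { react: { singleton: true }, "react-dom": { singleton: true } },
  };
}

const options = buildRemoteOptions("contentTeamA", ["HeroBanner", "PricingTable"]);
// In the team's webpack config this would be used roughly as:
//   plugins: [new webpack.container.ModuleFederationPlugin(options)]
```

Generating the `exposes` map from a list keeps the "dozens of components" case manageable, though a team could just as well write the map by hand.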

At runtime, the host application accesses a store of available content micro frontends and uses the published metadata to dynamically build the routes and menus, and to lay out the pages. This content can change hourly. Coordinating compilation and assembling the site content so that it can be rendered server-side is not possible with the tools available today.
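As a rough illustration of how published metadata can drive routes and menus, the sketch below turns a list of store entries into a route table and a menu. The `ContentEntry` fields and the "first entry wins" rule for duplicate paths are assumptions made for the example; the real store schema is not described here.

```typescript
// Hypothetical metadata record published by a content team.
interface ContentEntry {
  path: string;       // route the host should create, e.g. "/products/widgets"
  title: string;      // label shown in the menu
  remoteUrl: string;  // where the team's remote entry file is published
  module: string;     // exposed module name, e.g. "./HeroBanner"
  showInMenu: boolean;
}

interface Route { path: string; remoteUrl: string; module: string; }
interface MenuItem { label: string; href: string; }

function buildRoutesAndMenu(entries: ContentEntry[]): { routes: Route[]; menu: MenuItem[] } {
  const seen = new Set<string>();
  const routes: Route[] = [];
  const menu: MenuItem[] = [];
  for (const entry of entries) {
    // Assumption: if two entries claim the same path, the first one wins.
    if (seen.has(entry.path)) continue;
    seen.add(entry.path);
    routes.push({ path: entry.path, remoteUrl: entry.remoteUrl, module: entry.module });
    if (entry.showInMenu) {
      menu.push({ label: entry.title, href: entry.path });
    }
  }
  return { routes, menu };
}
```

Because both structures come from one pass over the same entries, a route can never appear in the menu without also being routable.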

Making SEO Available to the Crawler

We need to make the crawler see what we want it to see, which is the correct meta tag content and page title even if JavaScript is off. A server-side rendered application would return all the content, SEO tags, and page titles in one package, but as previously stated, our client’s content is loaded dynamically.

We developed a two-phase solution that injects the SEO data into the index file at render time. Our solution uses dynamic rendering that serves a snapshot of the HTML as seen the last time our crawler loaded the page: an almost exact copy of the client-side rendered content, save for any recent text edits. Reading the snapshot does not rely on JavaScript. This approach satisfies our client’s requirements and, most importantly, does not constitute cloaking as described in Google’s documentation. Cloaking is the practice of displaying different content to crawlers from what is displayed to end users, and it is treated as a malicious act.

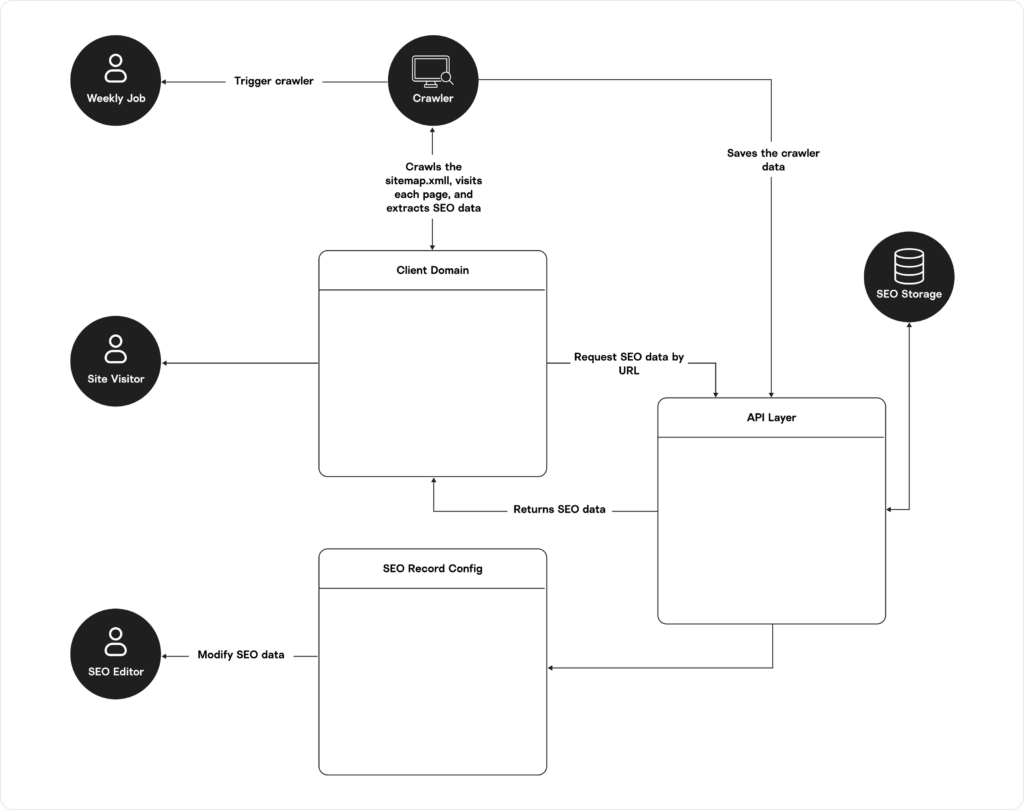

Our solution includes SEO data for all the page content in a central location; an ExpressJS server that serves the site; and a crawler to populate the SEO data store.

Phase 1: Capturing and Saving Page Content

The sitemap.xml is dynamically generated from the cached and published data, in the same store that generates the available routes. This ensures parity between available URLs and the sitemap. A weekly cron job uses Puppeteer to download the sitemap.xml document and crawl each URL found within. The page title, meta description, and page content are captured and saved into the same data store. Content publishers can make any other changes they want, and the weekly crawler will capture and update all of this data on its next run. Any links found in the body content that are not captured in the sitemap (such as deep routes not controlled by the host application) will also be found and added to the SEO data store.
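The capture loop can be sketched without tying it to a particular browser driver. In the example below, `extractSitemapUrls` pulls the `<loc>` entries out of a sitemap string, and `crawlSitemap` walks them with a page loader that is passed in. The record fields, the `PageLoader` type, and the in-memory `Map` standing in for the data store are all assumptions. With Puppeteer, the loader would typically wrap calls such as `page.goto`, `page.title()`, and `page.content()`.

```typescript
// What gets captured for one page; field names are assumptions.
interface PageCapture { title: string; description: string; bodyHtml: string; }
interface SeoRecord extends PageCapture { url: string; }

// Loads one URL in a headless browser and returns the rendered result.
type PageLoader = (url: string) => Promise<PageCapture>;

// Pull every <loc> value out of a sitemap.xml string.
function extractSitemapUrls(xml: string): string[] {
  const urls: string[] = [];
  const pattern = /<loc>\s*([^<\s]+)\s*<\/loc>/g;
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(xml)) !== null) {
    // Sitemaps escape "&" as "&amp;"; undo that to get the real URL.
    urls.push(match[1].replace(/&amp;/g, "&"));
  }
  return urls;
}

// Crawl each sitemap URL and save what was rendered. Returns the number saved.
async function crawlSitemap(
  xml: string,
  loadPage: PageLoader,
  store: Map<string, SeoRecord>
): Promise<number> {
  let saved = 0;
  for (const url of extractSitemapUrls(xml)) {
    try {
      const capture = await loadPage(url);
      store.set(url, { url, ...capture });
      saved++;
    } catch {
      // Assumption: a page that fails to load keeps its previous snapshot.
    }
  }
  return saved;
}
```

Passing the loader in as a parameter also makes the loop easy to exercise with a fake loader before pointing it at a real site.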

Phase 2: Rendering Metadata for SEO Crawlers

The React application is served by an ExpressJS backend with access to the data store populated by the crawler. Requests to the backend include the requested path – information that makes it simple to retrieve the SEO data from the data store for a specific URL.
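A small sketch of the lookup step: the requested path is normalized so that query strings, fragments, and trailing slashes do not cause misses, then used as the key into the store. The normalization rules and the `Map` used as a stand-in for the data store are assumptions; the actual store and its keys may look different.

```typescript
// Reduce a requested path to the form assumed to be used as the store key.
function normalizePath(requestPath: string): string {
  const withoutQuery = requestPath.split(/[?#]/)[0]; // drop ?query and #fragment
  const trimmed = withoutQuery.replace(/\/+$/, "");  // drop trailing slashes
  return trimmed === "" ? "/" : trimmed;             // the site root stays "/"
}

// Look up whatever SEO data was saved for this path, if any.
function findSeo<T>(store: Map<string, T>, requestPath: string): T | undefined {
  return store.get(normalizePath(requestPath));
}
```

In an Express handler the input would typically be `req.path` or `req.originalUrl`, and a miss would fall back to the site's default tags.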

Once the data is retrieved, the meta tags, Open Graph tags, page title, and the last snapshot of the body HTML are rendered into the HTML returned by the index endpoint. The backend does not detect crawlers; it renders this content even if a user follows this path in the browser. Not conditionally rendering the content allows social media linking to take advantage of the same meta tags as the search crawlers. If loaded in a browser, the snapshot body content is replaced when React takes over the render.
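Here is one way the injection step could look, as a sketch only. It assumes the index template contains two placeholder comments, `<!--seo-head-->` and `<!--seo-body-->`, which are our invention for the example, and it fills them with the title, description, Open Graph tags, and body snapshot.

```typescript
// SEO data for one URL; field names are assumptions.
interface SeoData { title: string; description: string; bodyHtml: string; }

// Escape text before placing it inside a tag or a quoted attribute.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Fill the (hypothetical) placeholders in the index template.
function renderIndex(template: string, seo: SeoData, url: string): string {
  const title = escapeHtml(seo.title);
  const description = escapeHtml(seo.description);
  const head = [
    `<title>${title}</title>`,
    `<meta name="description" content="${description}">`,
    `<meta property="og:title" content="${title}">`,
    `<meta property="og:description" content="${description}">`,
    `<meta property="og:url" content="${escapeHtml(url)}">`,
  ].join("\n");
  // Function replacers avoid "$" in the content being read as a pattern.
  // The body snapshot is inserted as-is because it is HTML captured from
  // the site itself by the crawler, not user input.
  return template
    .replace("<!--seo-head-->", () => head)
    .replace("<!--seo-body-->", () => seo.bodyHtml);
}
```

An Express route handler would send the returned string with `res.send(...)` for every request, crawler or not, which matches the unconditional rendering described above.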

Result: Express App Returns Populated SEO Content

When the user loads a page directly in a browser (from a deep link, etc.), the Express app finds the SEO data for that URL and serves up valid HTML to the browser. The React/Vue/Angular framework then takes over and the process continues as before. When the user navigates around the site, the title and description are dynamically updated by content teams via Helmet, so the net effect for browser clients is still a valid site.

When the search crawlers load a page, the Express app finds the SEO data for that URL and returns valid HTML to the crawler. The crawler will never do the same navigation that the browser does, so each URL is a new instance, and therefore a new request/response to and from Express.

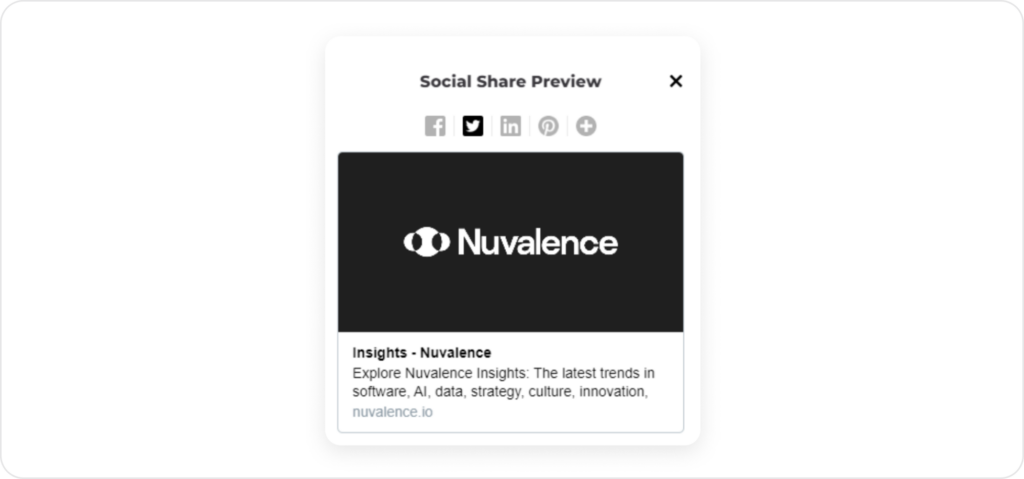

When a user adds a link from the website to a social media site, the Express app finds the SEO data for that URL and returns populated meta tags and Open Graph tags to that site’s link renderer.

A Major Win for Micro Frontend Fans

Our client’s site has over 400 pages, with more coming all the time. The platform has domains in 8 EU countries and all of North America. From the beginning of May 2023 (when the first pass of this solution was implemented) to the beginning of October 2023, total clicks and impressions showed remarkable gains: an increase approaching 300% in clicks and 400% in impressions.

These results show how essential SEO is, even in today’s AI-disrupted digital market. Because we found a better way to achieve client-side dynamic rendering with full support for Google’s SEO crawler (our focused goal here), as well as support for linking to Twitter, Facebook, LinkedIn, and others, search results performance has drastically improved, with steady month-over-month gains.

This is welcome news indeed for our client and their digital strategy. Improved search performance helps to support their investment in developing such a robust website, as well as their marketing campaigns that drive to the site and engage visitors across hundreds of targeted pages. With micro frontends helping to deliver a consistent, compelling web experience in every interaction, our SEO solution ultimately bolsters their ability to showcase the diverse range of products, services, and initiatives that demonstrate their innovation and industry leadership.